Generative AI and intellectual property

Length: • 7 mins

Annotated by Ayush Chaturvedi

We’ve been talking about intellectual property in one way or another for at least the last five hundred years, and each new wave of technology or creativity leads to new kinds of arguments. We invented performance rights for composers and we decided that photography - ‘mechanical reproduction’ - could be protected as art, and in the 20th century we had to decide what to think about everything from recorded music to VHS to sampling. Generative AI poses some of those questions in new ways (or even in old ways), but it also poses some new kinds of puzzles - always the best kind.

At the simplest level, we will very soon have smartphone apps that let you say “play me this song, but in Taylor Swift’s voice”. That’s a new possibility, but we understand the intellectual property ideas pretty well - there’ll be a lot of shouting over who gets paid what, but we know what we think the moral rights are. Record companies are already having conversations with Google about this.

But what happens if I say “make me a song in the style of Taylor Swift” or, even more puzzling, “make me a song in the style of the top pop hits of the last decade”?

A person can’t mimic another voice perfectly (impressionists don’t have to pay licence fees) but they can listen to a thousand hours of music and make something in that style - a ‘pastiche’, we sometimes call it. If a person did that, they wouldn’t have to pay a fee to all those artists, so if we use a computer for that, do we need to pay them? I don’t think we know how we think about that. We might know what the law might say, but we might want to change that.

Similar problems come up in art, and also some interesting cultural differences. If I ask Midjourney for an image in the style of a particular artist, some people consider this obvious and outright theft, but if you chat to the specialists at Christie’s or Sotheby’s, or wander the galleries of lower Manhattan or Mayfair, most people there will not only disagree but be perplexed by the premise - if you make an image ‘in the style of’ Cindy Sherman, you haven't stolen from her and no-one who values Cindy Sherman will consider your work a substitute (except in the Richard Prince sense). I know which I agree with, but that isn’t what matters. How did we reach a consensus about sampling in hip hop? Indeed, do we agree about Richard Prince? We’ll work it out.

Let’s take another problem. I think most people understand that if I post a link to a news story on my Facebook feed and tell my friends to read it, it’s absurd for the newspaper to demand payment for this. A newspaper, indeed, doesn’t pay a restaurant a percentage when it writes a review. If I can ask ChatGPT to read ten newspaper websites and give me a summary of today’s headlines, or explain a big story to me, then suddenly the newspapers’ complaint becomes a lot more reasonable - now the tech company really is ‘using the news’. Unsurprisingly, as soon as ChatGPT announced that it had its own web crawler, news sites started blocking it.

But just as for my ‘make me something like the top ten hits’ example, ChatGPT would not be reproducing the content itself, and indeed I could ask an intern to read the papers for me and give a summary (I often describe AI as giving you infinite interns). That might be breaking the self-declared terms of service, but summaries (as opposed to extracts) are not generally considered to be covered by copyright - indeed, no-one has ever suggested this newsletter is breaking the copyright of the sites I link to.

Does that mean we’ll decide this isn’t a problem? The answer probably has very little to do what that today’s law happens to say today in one or another country. Rather, one way to think about this might be that AI makes practical at a massive scale things that were previously only possible on a small scale. This might be the difference between the police carrying wanted pictures in their pockets and the police putting face recognition cameras on every street corner - a difference in scale can be a difference in principle. What outcomes do we want? What do we want the law to be? What can it be?

But the real intellectual puzzle, I think, is not that you can point ChatGPT at today’s headlines, but that on one hand all headlines are somewhere in the training data, and on the other, they’re not in the model.

OpenAI is no longer open about exactly what it uses, but even if it isn’t training on pirated books, it certainly uses some of the ‘Common Crawl, which is a sampling of a double-digit percentage of the entire web. So, your website might be in there. But the training data is not the model. LLMs are not databases. They deduce or infer patterns in language by seeing vast quantities of text created by people - we write things that contain logic and structure, and LLMs look at that and infer patterns from it, but they don’t keep it. So ChatGPT might have looked at a thousand stories from the New York Times, but it hasn’t kept them.

Moreover, those thousand stories themselves are just a fraction of a fraction of a percent of all the training data. The purpose is not for the LLM to know the content of any given story or any given novel - the purpose is for it to see the patterns in the output of collective human intelligence.

That is, this is not Napster. OpenAI hasn’t ‘pirated’ your book or your story in the sense that we normally use that word, and it isn’t handing it out for free. Indeed, it doesn’t need that one novel in particular at all. In Tim O’Reilly’s great phrase, data isn’t oil; data is sand. It’s only valuable in the aggregate of billions,, and your novel or song or article is just one grain of dust in the Great Pyramid. OpenAI could retrain ChatGPT without any newspapers, if it had to, and it might not matter - it might be less able to answer detailed questions about the best new coffee shops on the Upper East Side of Manhattan, but again, that was never the aim. This isn’t supposed to be an oracle or a database. Rather, it’s supposed to be inferring ‘intelligence’ (a placeholder word) from seeing as much as possible of how people talk, as a proxy for how they think.

On the other hand, it doesn’t need your book or website in particular and doesn’t care what you in particular wrote about, but it does need ‘all’ the books and ‘all’ the websites. It would work if one company removed its content, but not if everyone did.

If this is, at a minimum, a foundational new technology for the next decade (regardless of any talk of AGI), and it relies on all of us collectively acting as mechanical turks to feed it (even if ex post facto), do we all get paid, or do we collectively withdraw, or what? It seems somehow unsatisfactory to argue that “this is worth a trillion dollars, and relies on using all of our work, but your own individual work is only 0.0001% so you get nothing.” Is it adequate or even correct to call this “fair use”? Does it matter, in either direction? Do we change our laws around fair use?

In the end, it might not matter so much: the ‘Large’ in ‘Large Language Models’ is a moving target. The technology started working because OpenAI threw orders of magnitude more data into the hopper than anyone thought reasonable and great results came out of the other end, but we can’t add orders of magnitude more data again, because there genuinely isn’t that much more data left. Meanwhile, the cost and scale of these things means that a large part of the research effort now goes into getting the same or better results with much less data. Maybe they won’t need your book after all.

Meanwhile, so far I’ve been talking about what goes into the model - what about the things that come out? What if I use an engine trained on the last 50 years of music to make something that sounds entirely new and original? No-one should be under the delusion that this won’t happen. Having suggested lots of things where I don’t think we know the answers, there is one thing that does seem entirely clear to me: these things are tools, and you can use a tool to make art or to make cat pictures. I can buy the same camera as Cartier-Bresson, and I can press the button and make a picture without being able to draw or paint, but that’s not what makes the artist - photography is about where you point the camera, what image you see and which you choose. No-one claims a machine made the image.. Equally, I can press ‘Go’ on Midjourney or ChatGPT without any skill at all, but getting to good is just as hard. Right now they’re at the Daguerreotype stage, but people will use these to make art, not because we lack the skill, but because we’re not artists. .

The more interesting problem, perhaps, might be that Spotify already has huge numbers of ‘white noise’ tracks and similar, gaming the recommendation algorithm and getting the same payout per play as Taylor Swift or the Rolling Stones. If we really can make ‘music in the style of the last decade’s hits,’ how much of that will there be, and how will we wade through it? How will we find the good tuff, and how will we define that? Will we care?

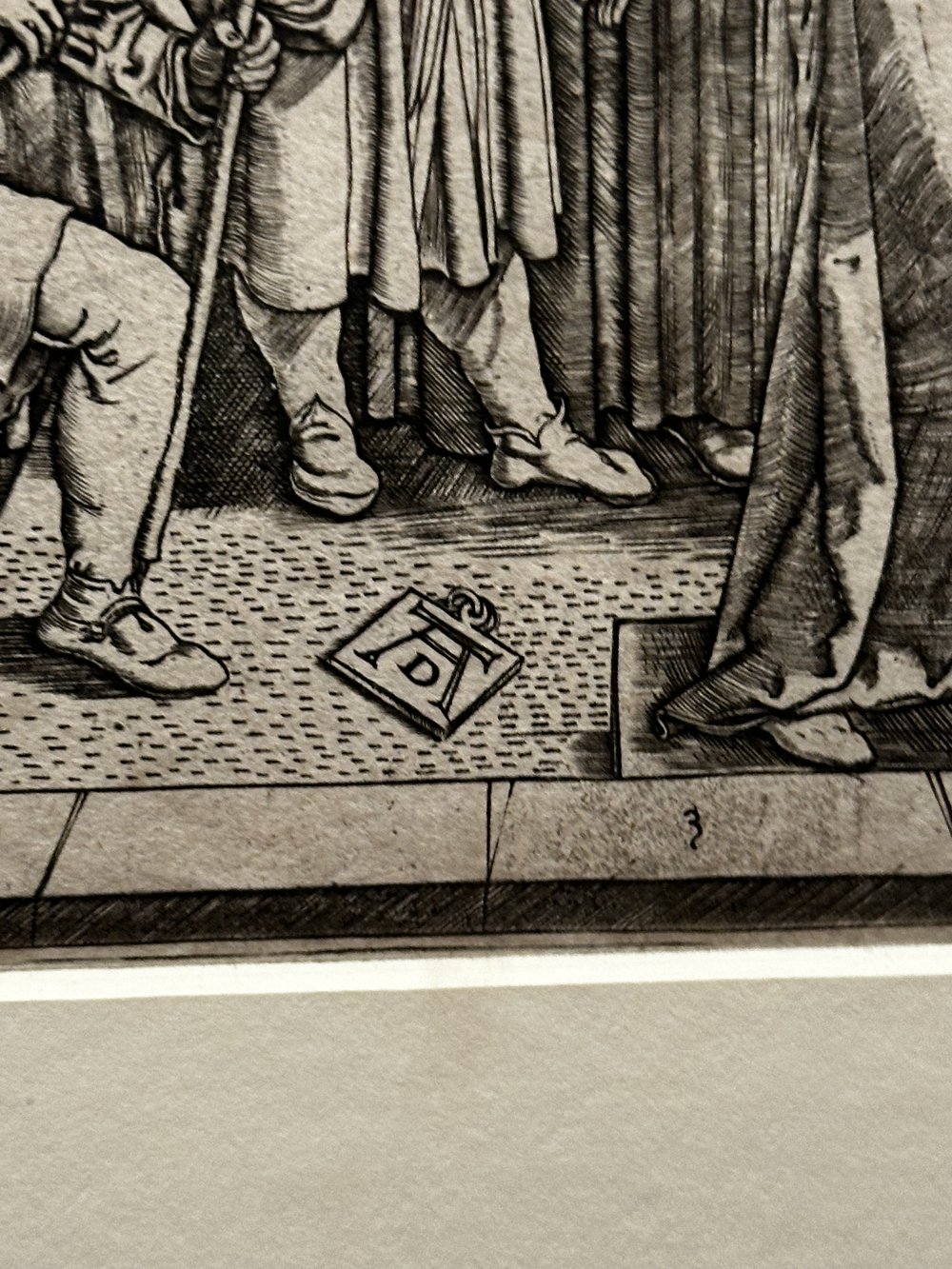

A few weeks ago, in an art gallery in London, I saw a Durer print that wasn’t a Durer print - it was a copy, made in around 1506 by Raimondi, a student of Raphael. Vasari tells us that Durer was furious and went to court in Venice. I treasure the idea of Venetian magistrates trying to work out how to think about this: their verdict was that Raimondi could carry on making the copies, but could no longer include Durer’s logo. That was a case about intellectual property, but the verdict is also a neat split between two ideas of authenticity. Do we care who made it, and why, or do we just want the picture? That's why some people are horrified by music generators or Midjourney, (or, 150 years ago, were horrified by cameras), and others aren't worried at all.