The productivity impact of AI coding tools

How much of a productivity boost do GitHub Copilot and ChatGPT give software engineers? Results from a survey with over 170 respondents, and a look into what to expect from AI coding tools.

Apr 18, 2023

A month ago, I asked developers and others working with AI coding tools like GitHub Copilot and OpenAI’s ChatGPT to share their experiences so far. More than 170 respondents shared personal insights. In this issue, we dig into this expert feedback, and I offer my thoughts, too.

Today, we cover:

- The survey. An overview of questions and the profiles of respondents.

- Comparable productivity gains. The gains which GitHub Copilot and ChatGPT offer are enticing, but not totally unprecedented within tech. Experienced engineers with decades in the business share examples of previous comparable productivity improvements.

- GitHub Copilot. Its most common use cases, where the biggest gains are to be found, and when this tool isn’t so useful.

- ChatGPT. Most common use cases and when to proceed with caution.

- Copilot vs ChatGPT. How the tools compare. When is one better than the other?

- The present and future of AI coding tools. Common observations and interesting predictions from survey respondents.

- Copilot and ChatGPT alternatives. The number of AI coding tools is growing; here’s a list of other popular, promising options.

- “Are AI coding tools going to take my job?” A very common question and source of concern for some engineers.

1. The survey

In the survey, I asked these questions:

- What AI coding tool have you been using recently?

- How long have you been using the tool for?

- How have you been using the tool?

- How would you summarize your view of the tool in one sentence?

- Does this tool make you more efficient?

- What areas have you seen the most productivity gains?

- What is a tool that has comparable productivity gains?

- What are areas where this tool is not that helpful?

- What types of work is this tool the most helpful for?

- Any other comments?

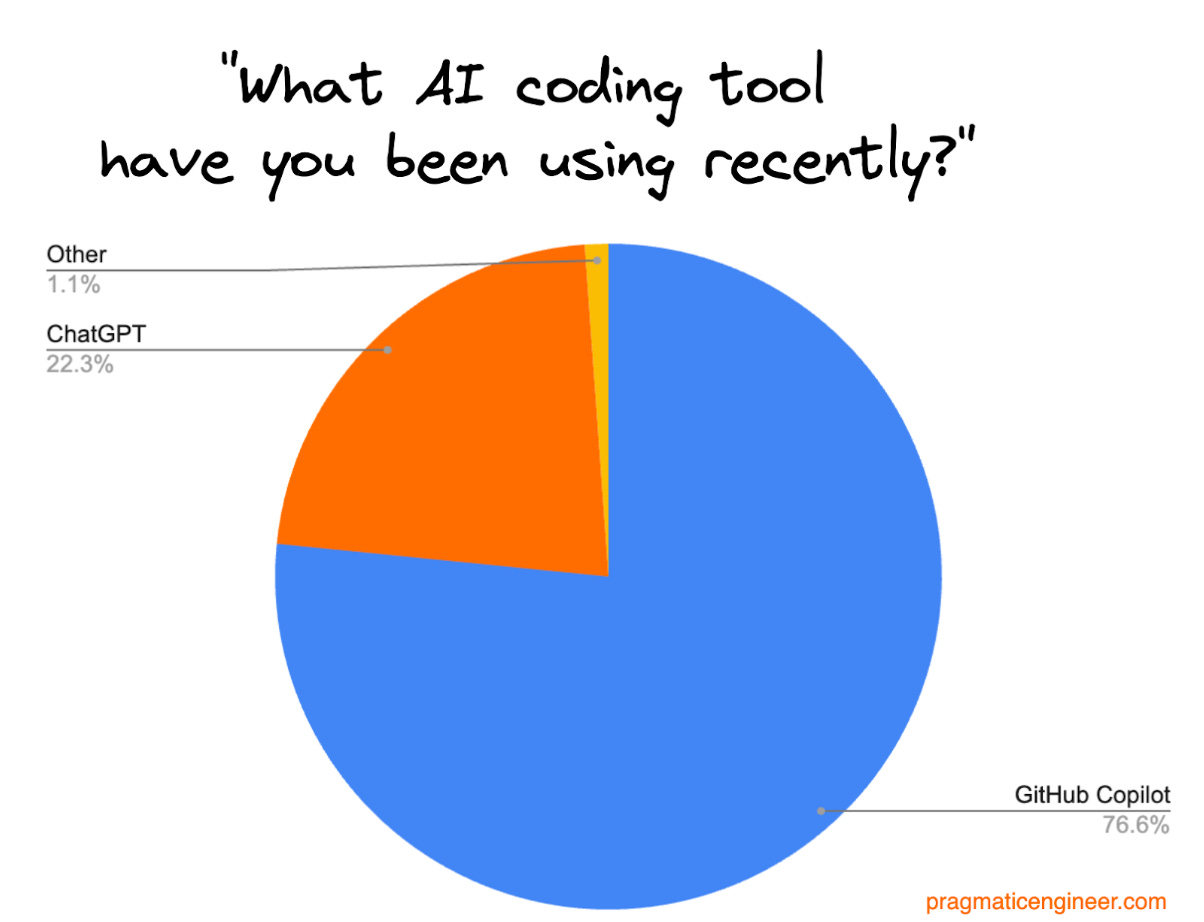

In total, 175 people responded with answers which I’ve analyzed. Of this number, 134 say they’ve been using GitHub Copilot, 39 say they use ChatGPT, and 2 responses mention other tools – including someone who uses Raycast AI together with Copilot:

Going through the responses, many people who marked “Copilot” as their answer also used ChatGPT, so the split is not as black-and-white as the above chart suggests.

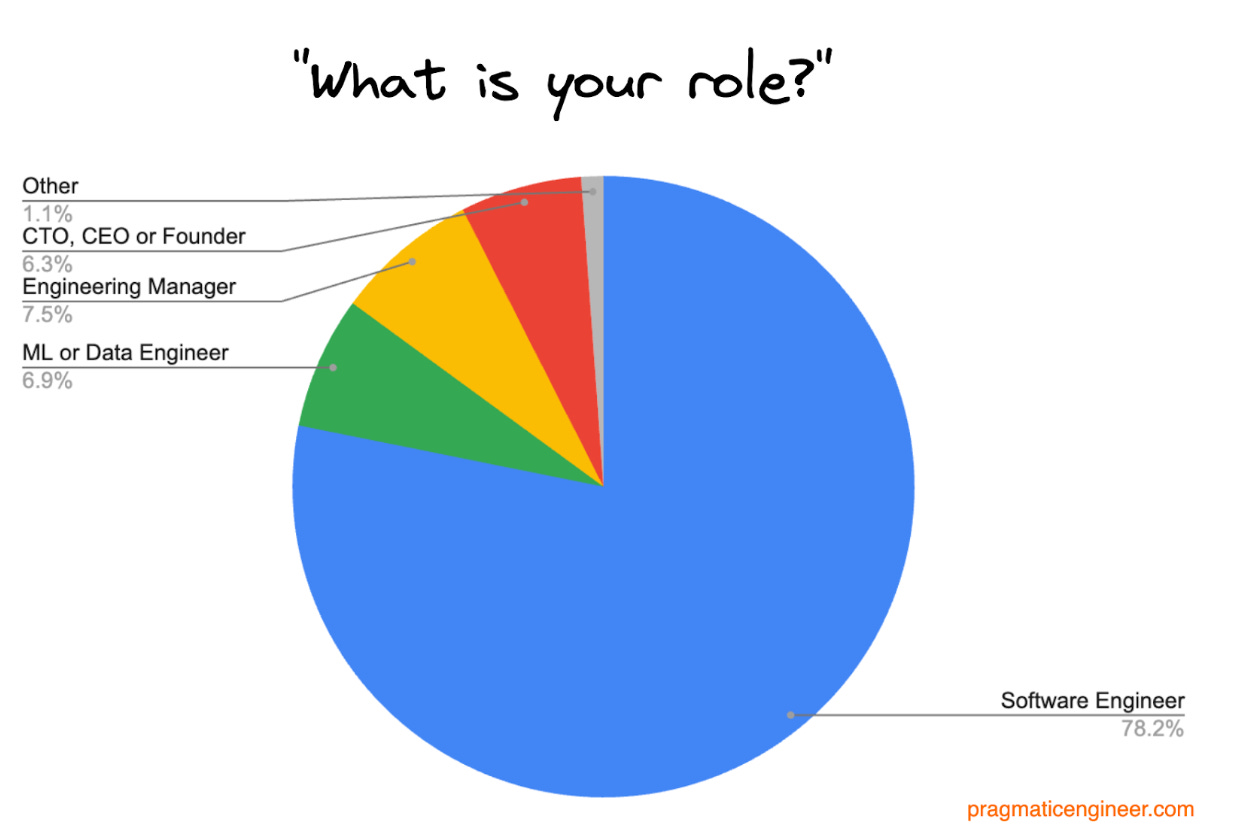

By role split, the majority of respondents are software engineers:

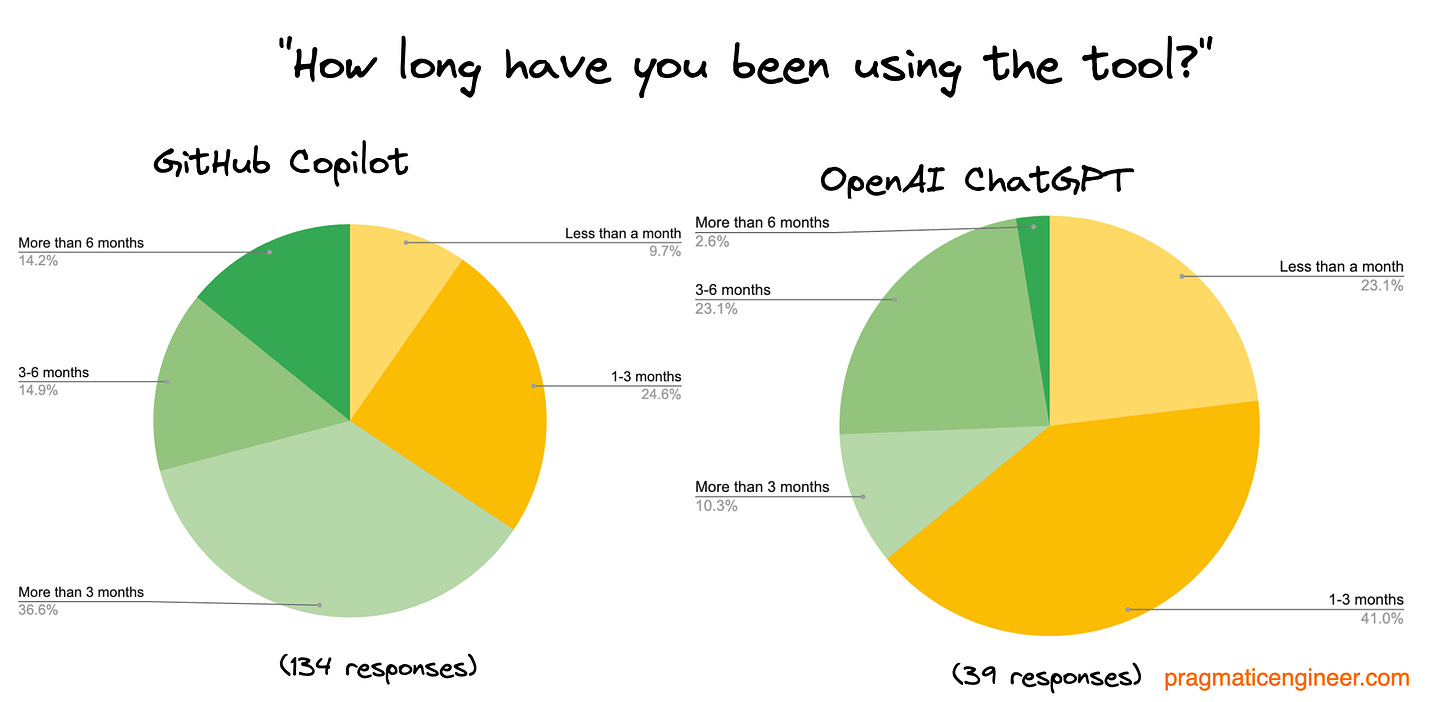

How long have people been using Copilot or ChatGPT? Over half of respondents say more than 3 months:

Despite the current hype around ChatGPT, it should be little surprise that respondents have used it for less time on average than GitHub Copilot: ChatGPT was publicly released in November 2022, about a year after GitHub Copilot, which has been available since October 2021.

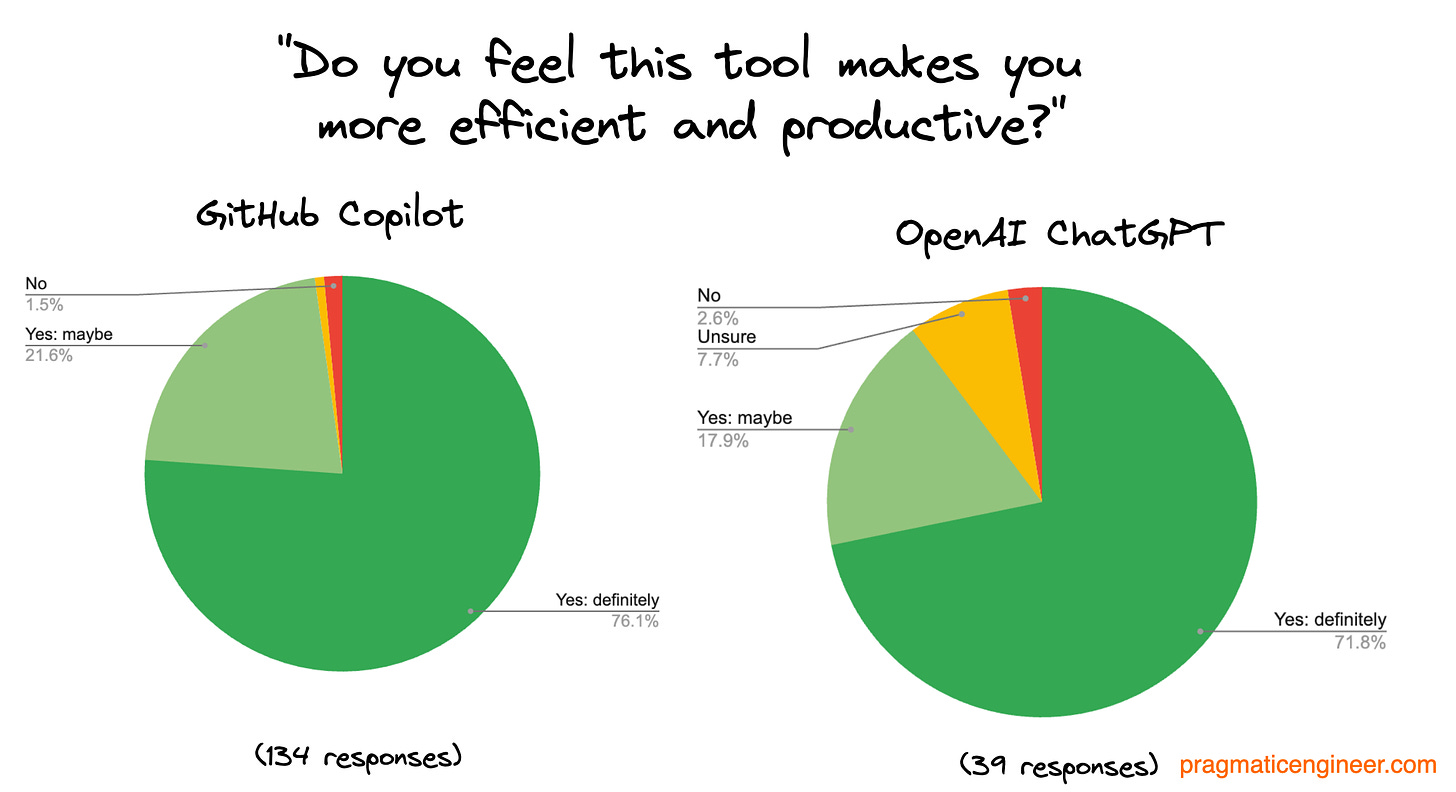

What about perceived efficiency gains from using these tools? Responses are quite positive:

You can browse the 🔒 raw data of all responses here.

2. Comparable productivity gains

Tech workers who’ve been using Copilot or ChatGPT overwhelmingly say the tools do bring productivity gains. So we definitely are seeing a “jump” in developer productivity. But is this increase a new phenomenon, or have similar productivity gains occurred before?

I asked this question in the survey, and engineers with substantial industry experience cite several occasions when they experienced productivity jumps comparable to those from Copilot or ChatGPT.

1. Using a debugger over print statements (1970s) One respondent compared the productivity gain of AI coding tools to using a debugger for the first time. Before debuggers, developers debugged applications by adding print statements that wrote to the console.

2. Usenet groups (1980s) Cloud solution architect Neil Mackenzie shared how, back in the day, print books took ages to arrive and Usenet groups were a big source of support when developing.

3. The World Wide Web (1989) Before the web, most software engineers learned coding from books and from each other. The arrival of the World Wide Web was a true game changer. Software engineer Michael Bushe shared that around 1992, his employer General Electric blocked developers from using the web, so they could not take advantage of it to communicate. He said that back then, developers knew each other’s specialisms by the stack of books on their desks.

4. MSDN quarterly CDs (1992) In 1992, Microsoft launched the Microsoft Developer Network (MSDN). It shipped CD-ROMs to subscribers quarterly, containing the latest documentation on Microsoft APIs and libraries, technical articles, and programming information. Much of this content was not available online, so developers building Microsoft software relied on these CDs for up-to-date documentation. Among all the listed productivity improvements, this feels the least significant. Still, it’s a nice reminder that compact discs were a small productivity boost in the 1990s!

5. Google (1998) A software engineer who’s been coding since before Google launched in 1998 shared that ChatGPT’s boost feels similar to what Google provided when it launched and people could discover programming-related answers online.

I wasn’t coding pre-Google; I started at my first workplace in 2008, where colleagues often reminisced about when you couldn’t Google answers and had to rely on books, or on knowing which websites to visit. While search engines existed before Google, like AltaVista or Ask Jeeves, Google was much better at locating relevant niche content like programming topics.

6. Modern IDEs (1997) Several people mention the introduction of the modern integrated development environment (IDE), with tools like Visual Studio (released 1997) or IntelliJ (released 2001), which made a major difference to coding compared to simple text editors like Notepad++.

Early versions of IDEs had capabilities like basic autocomplete and one-click commands to build, test or run programs.

7. ReSharper (2004) Several respondents say that ReSharper by JetBrains provided a significant productivity boost akin to AI coding tools today. ReSharper is a suite of intelligent application development tools, including functionality like:

- Refactoring support with features like renaming variables/functions/classes, changing method signatures, extracting methods, and introducing variables

- Enhanced navigation to jump around the code

- Advanced autocomplete

8. Scaffolding frameworks and tools (2005) Backend engineers note they experienced similar productivity improvements from adopting frameworks like Ruby on Rails (2005) or Laravel (2011). These frameworks could generate boilerplate code for app scaffolds and test structures with just a few commands.

9. Web developer tools (2006) Before such tools, developing for the web was painful, especially when it came to debugging JavaScript code or UI layout. Firebug, released in 2006, was a major breakthrough for frontend engineers’ productivity. In 2008, Google launched Chrome with its “Developer Tools,” and the company continues investing in these tools, which most frontend and fullstack engineers rely on.

10. Stack Overflow (2008) Near the end of the noughties, searching online for answers to programming questions felt broken. Google returned plenty of results for a query, but few solutions. I recall one site, Experts Exchange, showing up frequently among the top search results, but with the correct answer locked and visible only to paying members. Worse, the “correct” answer was often wrong.

In 2008, Stack Overflow launched and was an instant success. Engineers could ask questions and usually get high-quality answers, sometimes within hours. Over time, these high-quality answers became easy to find via Google, making it simple to find answers to common programming questions.

11. Smartphones (2007) One respondent said the leap AI coding tools offer feels similar to when they could first search topics on a mobile phone, instead of having to be at home on their desktop computer, or finding a library with internet access.

Interestingly, respondents compared AI coding tools’ productivity gains with only two tools released in the 2010s:

12. Jupyter notebooks (2011) Simon Willison, co-creator of the Django web framework, tells me that Jupyter Notebook for Python development – a web-based interactive computing platform – felt like a comparable productivity boost.

13. Tabnine (2019) Tabnine is an AI coding assistant similar to Copilot. It was first released in 2019 and used OpenAI’s GPT-2 model. Several people say Tabnine offered a similar productivity boost to Copilot.

14. “No comparison.” Some respondents say they’ve never seen a productivity jump like this, including a former software engineer, now an engineering director, who has been in the industry for 30 years and hasn’t experienced any technology that generated gains on this scale.

My take is that there have been plenty of technological “jumps” in productivity:

- Information access: driven by the spread of the internet, better search, and dedicated programming Q&A sites

- Developer tooling: debuggers, IDEs, and specialized debugging and developer tools for areas like web or mobile development

We are likely seeing a new category of productivity improvement, one we haven’t experienced before: a “smart autocomplete” and a “virtual coding assistant,” both of which build on improved information access and developer tooling.

3. GitHub Copilot

GitHub Copilot is powered by OpenAI Codex, released in August 2021. Codex is a descendant of OpenAI’s GPT-3 model and is reportedly less capable than the later GPT-3.5 and GPT-4 models.

The common sentiment among respondents about Copilot is that it increases engineering productivity in almost all cases. It’s most useful for autocomplete, writing tests, generating boilerplate code, and simple tasks. Several engineers refer to Copilot as a “fancy autocomplete” or “autocomplete on steroids.”

However, Copilot can (and does!) produce incorrect code. When using Copilot, assume it produces errors and verify the code. The consensus is that Copilot isn’t very useful for complex tasks, or when working in niche domains.

A few quotes from respondents summarizing sentiment about Copilot:

- "A useful tool that isn't always correct, but it is correct enough to be useful."

- “A really powerful tool in the hands of experienced engineers who have a lot of foundational knowledge, and are starting to code in a new language which they don’t know so well.”

- "Good for writing boring scaffolding, but only when you know the language or framework well."

- “It's my third hand. Many small tasks are easily done in Copilot.”

- "I don’t see it replacing seasoned engineers anytime soon, but those who adopt it will output vast amounts more."

How engineers use Copilot

The most-mentioned use cases:

- Scaffolding. “I have been using it mainly to get basic structure ready without typing it all out by myself. Helps me to do my task in a very short amount of time in most cases.”

- Boilerplate code. “Integrating with a very inconsistent SOAP service is an example of when I would go mad if I had to type it all out. Copilot is able to use bits of notes I have in IDE to generate reasonably-looking skeletons while adjusting types/names/literals.”

- Autocomplete. "I have it always on, suggesting autocomplete in the IDE."

- Generating tests and documentation. "I use it for everything: writing code, writing tests, writing documentation" and "generation of (block) comments, generation of tests, documentation."

- Generating repetitive code. "It generates a lot of code I would type anyway. It speeds up my work."

The biggest productivity gains

The biggest cited productivity gains of Copilot:

- Refactoring. One of the most-mentioned areas, and an area in which Copilot shines.

- Writing tests. “When writing the test, the autocomplete is accurate in tests and most of the time the result is ready to use as is, or needs only minor changes.”

- Common tasks. This includes writing a network request or an SQL query.

- Autocomplete. An even more powerful code completion aid.

- Boilerplate. Generating boilerplate code and skeleton code.

- Unfamiliar domains. Helpful when working with unfamiliar languages and frameworks.

- “Accelerate” into tasks. “I've found Copilot allows me to 'accelerate' into a task more quickly. There's a period when starting a task where you're familiarizing yourself with the domain, the codebase, and the problem space. Copilot is like a jump start that lets you get into the meat of the problem quickly. I'm now able to spend far more time crafting the product behaviors, be that API interface, UI, edge case handling, or more.”
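To make “common tasks” concrete, here’s a minimal sketch of the kind of routine query code respondents describe Copilot completing well. It uses Python’s built-in `sqlite3` module; the `users` table and the `find_users_by_domain` function are hypothetical, made up for illustration. Even for code this simple, it’s worth checking details like whether the query binds parameters rather than formatting input into the SQL string.

```python
import sqlite3


def find_users_by_domain(conn: sqlite3.Connection, domain: str) -> list[tuple[int, str]]:
    """Return (id, email) rows whose email address ends with @<domain>."""
    # The "?" placeholder makes sqlite3 bind the value as data, instead of the
    # value being formatted into the SQL string (which invites SQL injection).
    # Note: LIKE is case-insensitive for ASCII, and "_" or "%" inside `domain`
    # would act as wildcards, the kind of detail worth catching in review.
    cursor = conn.execute(
        "SELECT id, email FROM users WHERE email LIKE ? ORDER BY id",
        ("%@" + domain,),
    )
    return cursor.fetchall()


if __name__ == "__main__":
    # Hypothetical in-memory table, just for demonstration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
    conn.executemany(
        "INSERT INTO users (email) VALUES (?)",
        [("ana@example.com",), ("bo@test.org",), ("cy@example.com",)],
    )
    print(find_users_by_domain(conn, "example.com"))
    # [(1, 'ana@example.com'), (3, 'cy@example.com')]
```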

When Copilot is not so useful

What are areas in which Copilot is not that helpful? Commonly mentioned areas by survey respondents:

- Complex code or logic. Copilot sometimes fails to understand more complex queries. An engineer said they often disable Copilot when writing more complex business logic so as not to be distracted by the often incorrect code it tries to guess. Also, Copilot can generate complex code that’s hard to debug.

- Generating complete functions. Many people mention being unhappy with the results of letting Copilot generate a whole function.

- Non-trivial APIs. For APIs which are tricky to use – like JavaScript dates and time zones – Copilot can get things wrong, and debugging the results is frustrating.

- When developing in an unfamiliar language. Because Copilot sometimes suggests incorrect code, it’s risky to rely on it entirely when you’re not well-versed in a language.

- Having Copilot always on can get overwhelming. Copilot is always suggesting code, even when it’s not needed. This level of interaction can become tiresome and distracting.

- Errors in generated code. A common complaint is that Copilot generates errors which are sometimes hard to spot.

A technical cofounder neatly sums up when not to use Copilot:

“If I don’t know exactly what I want or I cannot accurately explain what I want, the AI tool will, of course, struggle to do what I need it to.”

4. ChatGPT

The common sentiment on ChatGPT is that it’s a useful tool which speeds up the learning of new languages and frameworks, but that it can and does get things wrong. This means you need to learn how to use it, and how to “prompt it right.”

How engineers use ChatGPT

A few quotes from respondents summarizing ChatGPT for coding:

- "It’s like having an expert engineer with you to ask questions; it doesn’t know everything, isn’t always right, but massively accelerates learning and gets you going in the right direction."

- "It's an assistant to my work and a guide to the knowledge I need to research."

- "We use it as a digital co-worker, a L1 SWE that helps L2/L3 engineers and a PM."

- "It's very helpful and I believe it will evolve to be more accurate when giving suggestions."

One of my favorite summaries is from a software engineer who says using ChatGPT is like having an “interactive rubber duck:”

“ChatGPT mostly speeds up coding, but sometimes it shows alternatives, makes corrections, suggestions and gives hints that are mind-opening. It also motivates you as well because you have someone to talk to, like it was a ‘rubber duck.’”

The biggest productivity gains

The most common cited productivity gains with ChatGPT:

- Learning: new concepts, tools, and frameworks. Interestingly, learning unfamiliar topics was the use case mentioned most frequently for ChatGPT. Researching new topics is also a common use case.

- Routine, boring tasks: for example, writing SQL or Elasticsearch queries, and understanding what JSON responses mean.

- Getting started: kicking off new projects and tasks, and overcoming initial barriers. As one respondent shared: “it breaks the initial barrier of ‘where to begin?’” Generating code for greenfield tasks or projects is also a common use case.

- Improving code quality: by asking ChatGPT to improve code directly, or to critique it. Another use case is to input code and ask ChatGPT to refactor it.

- Prototyping: several engineers mention they use ChatGPT to throw together a prototype quickly.

- Debugging: One use case is to give ChatGPT some code and ask it why that piece of code isn’t behaving as expected.
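As a hypothetical example of the debugging use case, this is the kind of short snippet one might paste into ChatGPT with the question “why does the list keep growing?” The bug is Python’s mutable default argument: the default `[]` is evaluated once, when the function is defined, and is then shared across calls. The function names are made up for illustration, and any explanation a chatbot gives for a bug like this still deserves a quick check, for example by running the code.

```python
def add_tag_buggy(tag, tags=[]):
    # Bug: the default list is created once, when the function is defined,
    # so every call that omits `tags` appends to the same shared list.
    tags.append(tag)
    return tags


def add_tag_fixed(tag, tags=None):
    # Fix: use None as a sentinel and create a fresh list on each call.
    if tags is None:
        tags = []
    tags.append(tag)
    return tags


if __name__ == "__main__":
    add_tag_buggy("a")
    print(add_tag_buggy("b"))  # ['a', 'b']: state leaked from the first call
    add_tag_fixed("a")
    print(add_tag_fixed("b"))  # ['b']
```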

When ChatGPT is not so useful

- Hallucinating non-existent frameworks or libraries. Several engineers mention that ChatGPT hallucinates things like an API within a small JavaScript library that doesn’t exist. It also told another respondent that SQLite has a just-in-time (JIT) compiler, when it does not. The lesson: treat all suggestions from this tool with caution and assume it will sometimes make things up.

- Out-of-date knowledge. When using modern frameworks, ChatGPT will not be up-to-date with the latest API changes or language features. This is especially visible with frameworks that have evolved a lot recently, like SwiftUI.

- Not great for specialized frameworks. Several people mentioned lackluster performance when working with specialized, niche frameworks, for which ChatGPT likely had less training data. This is very relevant for closed-source proprietary frameworks, and for specialized fields like audio processing, or SRE tasks in the computer vision field.

- Complex tasks. When ChatGPT is asked to generate larger pieces of code, or code spanning several files, the output tends to have more bugs, and ChatGPT can seem confused: it loses track of variable names or sometimes hallucinates APIs.

- Generates “dated” code. Because ChatGPT was trained on data sets collected in or before 2021, the code it generates can rely on libraries and APIs up to 2 years behind the state of the art.

- Errors in code generated. Like with Copilot, a common complaint about ChatGPT is that it generates code with errors. Personally, I feel output from both coding companions should be treated as if it came from an inexperienced engineer: they have breadth of knowledge, but lack context for a given project or task.
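One cheap guard against hallucinated APIs, sketched below under the assumption that you’re working in Python: before relying on a function a chatbot suggests, check that the module and attribute actually exist. The `api_exists` helper is hypothetical, written for illustration with the standard `importlib` module. It only confirms that a name exists, not that it behaves as claimed, so it would not catch a factual error like the SQLite JIT example above.

```python
import importlib


def api_exists(module_name: str, attr_path: str) -> bool:
    """Return True if `module_name` imports and exposes the dotted `attr_path`,
    e.g. api_exists("json", "JSONDecoder.decode")."""
    try:
        obj = importlib.import_module(module_name)
    except ImportError:  # also covers ModuleNotFoundError
        return False
    # Walk the dotted path one attribute at a time.
    for part in attr_path.split("."):
        if not hasattr(obj, part):
            return False
        obj = getattr(obj, part)
    return True


if __name__ == "__main__":
    print(api_exists("json", "loads"))       # True: a real function
    print(api_exists("json", "loads_fast"))  # False: a made-up name
```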

5. Copilot vs ChatGPT

So which one is better? Copilot seems to excel at coding tasks, while ChatGPT is more helpful for learning and as a “virtual companion.”

Survey respondents have shared the most productive use cases for each tool, and there’s a clear distinction in where each shines. It’s unsurprising that Copilot excels at common coding tasks like tests, refactoring, and boilerplate. What I find more interesting is how well ChatGPT works as a companion that can teach, give feedback, and even offer ideas when debugging.

As Copilot and ChatGPT have different use cases, the most productive engineers are likely using both tools, each for its strengths.

‘Autocomplete’ vs ‘learning and code review.’ A staff software engineer who’s been using Copilot and ChatGPT for 3-6 months summarizes the differences like this:

“I use ChatGPT to learn idioms in new languages, as code review, and as a mentor. With Copilot, I use autocomplete for familiar tasks I have strong subject knowledge in. I disable Copilot when I work in unfamiliar territory.”

Another software engineer frames the difference between the tools as knowing the right question to ask versus having a stricter lexicon:

“I've found Copilot to pair especially well with domain driven development (DDD.) Part of being effective with ChatGPT is knowing the right question to ask. Having a stricter lexicon with which to describe your software creates a positive feedback loop between prompt and code.”

GPT-4, available in ChatGPT, feels a lot more advanced in its capabilities than the Codex model that powers GitHub Copilot. Because of this difference in output quality, an “apples to apples” comparison of the two tools is hard. I’m looking forward to seeing how Copilot improves once its engine is upgraded from the current Codex version.

6. The present and future of AI coding tools

What are common observations and interesting predictions by respondents? Here are a few I’ve collected:

The present

Today, you need to be skeptical and verify the responses. Both Copilot and ChatGPT generate incorrect code, and it’s foolish to assume they won’t. As one software engineer puts it:

“I cannot stress this enough, it only speeds you up if you know what you want to write, and you use it as a boilerplate generator. If you are trusting the implementations generated by these tools, you are in big trouble.”

Using AI coding tools makes work more enjoyable. Several engineers mention that using Copilot or ChatGPT means they spend less time on the “boring” parts of the job, and more on the interesting stuff.

“Copilot helps mostly with coding and it helps that I have to do less of the boring stuff. I hope it improves to give even more precise and useful suggestions. But it already works better than 5 months ago!” – a frontend developer

“I don't know if Copilot speeds up the work overall, but it makes me enjoy writing code a lot more.” – a software engineer

“These tools only speed up the actual implementation or coding phase. Since most of my time is already spent thinking and designing the abstraction, the total amount of time saved is marginal. However, since the actual typing of the implementation is the most boring part, I found that my work is a little more enjoyable. I would not call them a “must have,” not yet.” – a frontend developer

AI coding tools are already very useful for experienced engineers. Many respondents emphasize this point:

“I believe this generation of AI tools is very useful for senior engineers because they can be aware of inefficient code, or spot bugs generated by the AI” – a senior software engineer

“Definitely speeds up work, as long as you're familiar with that context/ language/ technology. Otherwise it puts you in a bad state” – a tech lead

“I am a senior+ engineer, so I tend to validate everything I see. This means no bugs introduced by Copilot. I treat its output like pair programming with a robot”

An advantage in take-home interviews. A use case for AI coding tools that’s already practical is completing take-home coding exercises faster:

“They speed up the boring bits of coding for me. I used them to do a take home, which was nice and probably a must have, considering everyone seems to pour a lot more time into take homes than is stipulated.” – an ML engineer

The future

AI coding tools will become a standard fixture of software development, say plenty of respondents:

“‘Must have’ is hard to say – technically an IDE is not a ‘must have’ but it's pretty standard now. I expect this will be the case for AI coding tools in future.” – a software engineer

“I'm sure it will be part of every IDE in one way or another. It saves keystrokes significantly, but it could be hard for juniors to spot issues or filter out garbage.” – a senior software engineer

“Not sure if it will be a ‘must have’, but I believe they'll be like modern IDEs: a given. I'd like to say one could work without them, but when I tried doing without it, I quite missed it.” – a software engineer

“I think Copilot will be like Autocomplete, not having it will be a big disadvantage.” – a senior backend developer

Personally, I think AI coding tools will become part of the development workflow across the industry, similar to how using IDEs or CI/CD is a given at most places.

“Learn how to use them or get left behind.” With the productivity gains clear, and the likelihood that AI coding tools will become a standard part of the development toolkit, professionals who don’t embrace this tech risk being left behind. Some views from the survey:

“These tools will be a must have and any engineer who does not use them will simply be unproductive and considered unresourceful, akin to an engineer that does not use Google because they believe that a good engineer must be able to work independently.” – a CTO

“I think people who dismiss these tools are/will be missing out. Not just for coding, but for writing, all kinds of stuff. The interactive single interface for knowledge acquisition is so convenient/fast.” – an ML engineer

“Any org or coder not using it will fall behind. If you reject these technologies you are an arrogant child” – a software engineer

AI tools will likely help generalists more, at least initially. An interesting observation from a senior software engineer:

“I think it is a must have for engineers who are more like generalists and work with different programming languages, or work on different layers of development, like database, message queues, backend to frontend. Probably, it won’t help specialists too much who have a deep understanding of one area, like performance optimizations.”

AI coding tools will only get better. We’ve seen a major leap with Copilot and ChatGPT and we can only expect further improvements. From respondents:

“In about a year of using Copilot, it improved so much so that I expect it to become better and better in the next few years”

“I think AI coding tools will get better and become more useful. But adding guide rails around these products is going to become essential”

“Copilot definitely improves efficiency and reduces your cognitive load. I would like to see it become more accurate in what it generates.” – a computer science grad student.

This is the hype phase. Whenever I bump into software engineers, product folks or engineering managers, after a few minutes we always end up talking about ChatGPT, LLMs, Copilot and the impact they’ll have on software engineering. There’s a pull to get involved and the FOMO (fear of missing out) is real. This is typical of a hype phase and it’s not just me; several respondents articulate the same feeling.

It’s hard to tell how much AI coding tools will live up to the hype. Perhaps our current expectations are unrealistically high. Then again, ChatGPT and other AI tools, like image generators, have lived up to the hype so far, so perhaps it’s warranted.

A few more predictions:

- Less trust in contractor work. With AI coding tools already close to mainstream, it’s safe to assume contractors and remote workers will use them, even if they don’t care to admit it. In all fairness, I don’t think much will change in contractor relationships; perhaps customers will need to review and validate code more vigilantly than before.

- More AI-generated code will ship to production without human review. While engineers should not blindly trust AI-generated code, it’s tempting to assume the code is fine and skip reviews. The better AI coding assistants get, the more frequently reviews will be skipped, or stamped ‘LGTM’ (looks good to me).

- There will be an impact on tests. A popular use case for Copilot is generating tests which are tedious to write. This could eventually make tests less useful: the point of a good test suite is that the implementation can change, as long as well-written tests still pass. However, if tests are generated from the implementation, the suite might test the specifics of the implementation, and not only the intended behavior!

- There will be an impact on coding style. Copilot’s ‘default’ coding style will make its way into a variety of codebases. Whichever AI coding tool becomes the market leader will shape the ‘default’ coding styles at organizations, especially in greenfield projects or at companies with no coding style guide in place.
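To illustrate the test concern above, here’s a minimal, hypothetical Python sketch. `FibV1` and `FibV2` compute the same Fibonacci numbers, but `FibV2` is a refactor that drops the memoization cache. A test written against behavior passes for both; a test that mirrors the first implementation (here, by inspecting its private `_cache`) fails after the refactor, even though nothing observable changed. All class and function names are made up for illustration.

```python
class FibV1:
    """Original implementation: recursive with a memoization cache."""

    def __init__(self):
        self._cache = {0: 0, 1: 1}

    def fib(self, n: int) -> int:
        if n not in self._cache:
            self._cache[n] = self.fib(n - 1) + self.fib(n - 2)
        return self._cache[n]


class FibV2:
    """Refactored implementation: iterative, no cache. Same results."""

    def fib(self, n: int) -> int:
        a, b = 0, 1
        for _ in range(n):
            a, b = b, a + b
        return a


def behavior_test(impl) -> bool:
    # Checks only the contract: known Fibonacci values.
    f = impl()
    return [f.fib(n) for n in (0, 1, 2, 10)] == [0, 1, 1, 55]


def implementation_test(impl) -> bool:
    # Mirrors how FibV1 works internally: expects a filled-in `_cache`.
    f = impl()
    f.fib(5)
    return getattr(f, "_cache", None) == {0: 0, 1: 1, 2: 1, 3: 2, 4: 3, 5: 5}


if __name__ == "__main__":
    for impl in (FibV1, FibV2):
        print(impl.__name__, behavior_test(impl), implementation_test(impl))
    # FibV1 True True
    # FibV2 True False
```

The refactor is safe, but only the behavior-based test can tell.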

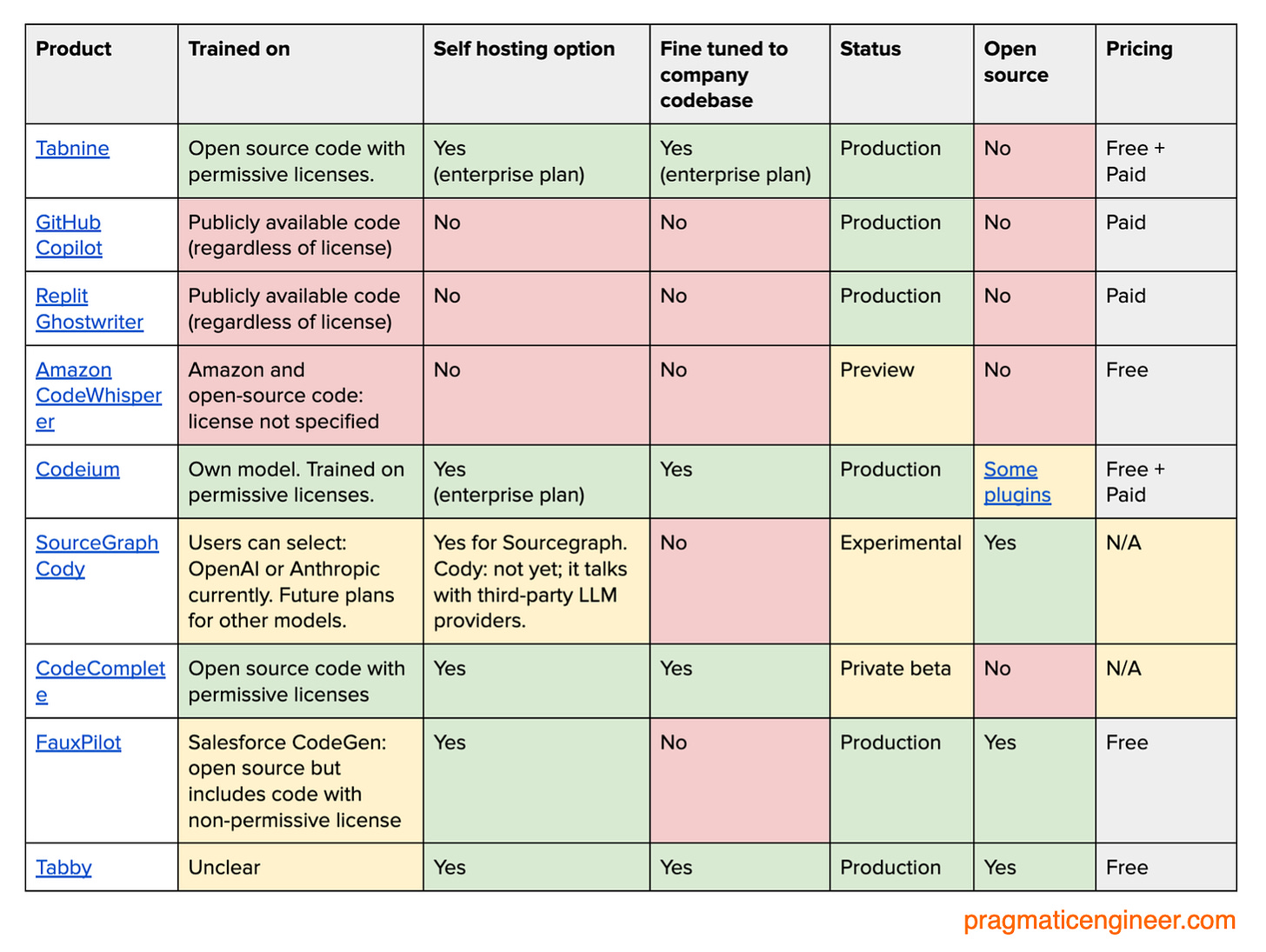

7. Copilot and ChatGPT alternatives

There are plenty of tools to choose from aside from Copilot and ChatGPT. Here are the most promising ones worth checking out, with an emphasis on those with self-hosting as an option. Date of launch is in brackets:

- Tabnine (2019)

- GitHub Copilot (2021)

- Replit Ghostwriter (2022)

- Amazon CodeWhisperer (2022)

- Codeium (2022)

- Sourcegraph Cody (2023)

- CodeComplete (2023)

- FauxPilot (2023)

- Tabby (2023)

ChatGPT alternatives

One important thing to note about ChatGPT is that, by default, it uses your input via the web interface to train its model. This is how Samsung employees leaked confidential data by asking ChatGPT to generate meeting notes. ChatGPT retains user data, even that of paying users. If you don’t consent to this, you need to opt out.

Below are a few ChatGPT alternatives which do not “leak” data like it does, by making user-entered data part of a training set which can later be accessed by all customers:

- OpenAI APIs. Curiously, OpenAI uses data entered via the ChatGPT web interface for training, but not data sent via its APIs. So an obvious workaround is to use the APIs with a wrapper, like the open source Chatbot UI.

- Azure OpenAI Service. Fine-tune custom AI models with your company data and hyperparameters.

- MosaicML. Train large AI models with your company data in a secure environment. Point to an AWS S3 bucket, and that’s it!

- Glean. AI-powered workplace search across a company’s apps, powered by deep learning-based large language models (LLMs).

- Aleph Alpha. A self-described European AI technology company, which has open sourced its codebase and doesn’t use customer data to train models.

- Cohere. A set of LLMs to generate text, summarize it, classify and retrieve it.

- Writer. A generative AI platform that trains on the company’s data.
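For the OpenAI APIs option above, a wrapper ultimately sends HTTP requests to OpenAI’s Chat Completions endpoint. Below is a minimal sketch using only Python’s standard library, assuming the `/v1/chat/completions` endpoint and request shape as OpenAI documented them in 2023. The `gpt-3.5-turbo` model name and the helper names are illustrative choices, not how Chatbot UI works internally. `build_chat_request` only constructs the request; sending it with `send` requires network access and a valid API key.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str, model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions POST request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first reply's text (needs network + key)."""
    with urllib.request.urlopen(request) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    import os

    key = os.environ.get("OPENAI_API_KEY")  # assumed env var name
    if key:
        print(send(build_chat_request(key, "Summarize what a SQL JOIN does in one sentence.")))
```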

Building your own company model instead of using a centralized LLM provider is another alternative, and this approach could be prudent for businesses conscious about not passing sensitive and proprietary data to vendors. Databricks created Dolly, a cheap-to-build LLM which works pretty decently compared to ChatGPT, although the model is more dated. They also open sourced 15,000 records of training data. Read more about Dolly.

A very relevant question in the coming age of LLMs will be “buy, build, or self host?” This is because the usefulness of LLMs depends on two things: the model, and the additional training data. Companies will want to train LLMs on their in-house data to make them most useful for staff. However, trusting a vendor with in-house data is riskier than building and operating an LLM in-house, or self-hosting one. But both self-hosting and, especially, building an LLM come with considerable costs. Still, it’s early days, so perhaps experimentation like this makes sense?

It feels to me that ChatGPT alternatives are an incredibly hot topic and we’ve likely only skimmed the surface of startups working in this area. For example, there are more than 50 LLMs with 1B+ parameters that can be accessed via open-source checkpoints or proprietary APIs. Here’s a list of them, collated by software engineer Matt Rickard.

8. “Are AI coding tools going to take my job?”

Let’s close with the proverbial elephant in the room: are software engineering jobs at risk from Copilot, ChatGPT and other AI tools? Firstly, here’s what respondents say.

Coding is just one part of being a software engineer. Several people point out that the current tools may speed up coding, but there’s plenty more that engineers do.

“Coding is just one part of software engineer work. I don't see AI as a replacement for software engineers in the foreseeable future.” – a software engineer

“It speeds up coding, for sure. Architectural design or any abstraction level above simple functions, not so much. I'd love to have more AI: the less time I have to spend on syntax, the more time I have to actually program. Ideally, AI provides a higher abstraction layer like fourth-generation languages are doing relative to machine code.” – a freelance software developer

“The value-add part of our job is NOT writing the code, but actually understanding what has to be done, choosing an approach, and navigating the tradeoffs. Programming competence, of course, is needed because you must understand and double check everything the tool generates. We can’t forget that AI-generated code is different from project boilerplate generators or scaffolders: machine learning (ML) results are probabilistic, not deterministic.” – a software developer and researcher

It will automate a lot of other tasks before it can 'automate away' coding. There are many types of work which LLMs can automate; coding is not the first item on that list. From an ML engineer:

“I think these tools will be very helpful in office work: emails, simple spreadsheets, note-taking, conversation summaries. In this, I think they will help users who are less tech-savvy to do a lot of things with simple code, as well as automate away some of these chores.”

It's still just a tool. I like how a DevOps engineer puts it, noting that "a fool with a tool is still a fool":

“It’s a great tool, but “a fool with a tool is still a fool” still applies. You need to keep your hands on the steering wheel and take the lead. I have seen it suggest BS and produce inconsistent code. Still, it makes coding more fun and I have learned new ways to solve problems. Awesome stuff. The future is here!”

It will reinforce the "Matthew principle." The Matthew principle describes how 'the rich get richer and the poor get poorer.' A senior software engineer thinks that AI coding tools will have exactly this effect:

“I think this will be another instance of the Matthew principle. Those that have the right predisposition will get better, learn faster and be more productive. Those that understand how the tool works will be better positioned to apply appropriate caution. It’s almost useless for everyone else and in fact, dangerous, since the tone of responses is quite authoritative.

In general I think this will just exacerbate all existing problems with software engineering, of lots of bad code being written faster and lots of half-baked products created faster. The biggest gain accrues to those who are already experts or already have some kind of advantage: e.g. cultural, experience, education level and so on.”

My take is that the recent leap in AI, with large language models and generative AI, brings yet another productivity improvement to software engineering. However, this change goes beyond earlier improvements like debuggers or better developer tools, which benefited only software engineers.

I expect AI to have an impact similar to that of the world wide web: improving the efficiency of software engineering, while also having broader effects that are hard to predict precisely. However, I am pretty confident about a few things:

- Get onboard and master this new tool. As with the dawn of the world wide web, there’s the option to keep doing things the same way as before, such as by consulting only programming books. As a software engineer you can choose to ignore AI coding tools, but you’ll likely be handicapping yourself.

- Smaller teams will produce more with AI-enhanced tools. Small teams will ship faster, and build more ambitious projects. Teams with mostly junior engineers will still likely get things done quicker, even though I suspect that having at least one experienced engineer will help with debugging the inevitable errors AI coding tools generate, and ensuring the code shipped remains maintainable.

- The lean, fast startup will return. We already see this happening with AI startups. Midjourney is a 10-person startup. OpenAI, with barely 300 engineers, is running rings around Google, which has an army of thousands doing AI work.

- We'll see a new generation of 'junior' engineers. There will be a generation of early-career engineers who make the most of AI-assisted coding tools to get things done quicker, learn faster, and potentially be more productive than some more experienced engineers. This is similar to what happens with every technological leap: those who master the tools early reap the additional benefits and grow faster.

- Senior engineers who figure out how to use these tools well will also improve their productivity. Simon Willison is the co-creator of Django and a very productive programmer. He has shared that he estimates Copilot gives him at least a 20% productivity boost in time spent writing code, which is material. More importantly, he's shared how AI-enhanced development makes him more ambitious with his projects. For me, Simon is an example of a senior engineer embracing this new technology and further leveling up his already high productivity.

Takeaways

We’re in the middle of a technology inflection: AI coding tools are improving productivity in ways most of us haven’t seen in a decade or more; at least not since modern IDEs with autocomplete and advanced developer tools went mainstream.

To me, the surprising thing is how quickly this change has happened: GitHub Copilot launched as a technical preview in June 2021 and exited that stage in the summer of 2022, while ChatGPT 3.5 launched in November 2022, and ChatGPT 4 a month ago, on 14 March.

I see this phase as the perfect time to experiment and play around with AI coding tools. Because this area is so new, nobody has it all figured out and things are changing rapidly. And we could well see more changes with the release of new advanced models and services wrapping these models. It's not just vendors releasing models and services: some larger companies are self-hosting open source models, and a couple of tech companies are building their own, like Google (LaMDA) or Meta (LLaMA).

While sourcing alternatives to Copilot and ChatGPT for this article, I came across dozens of companies founded less than 6 months ago, many of which have not publicly launched a product.

AI coding tools will, yet again, move the industry forward, and we have front-row tickets for the show. We can choose to spectate and try out some tools, and we can also participate and contribute to some of these tools, get involved with startups building them – or even found a company doing precisely this.

Every big shift brings change and change creates winners and losers. Those who lose out are frequently people and organizations which refuse to acknowledge the change taking place, while winners make the most of the change and opportunities which new technology offers. I like to think of change as an opportunity: get involved early and figure out how to become more productive and more ambitious with AI-enhanced development, like Simon Willison does.

The future is happening, and it’s a very exciting time to be in the industry!

The Pragmatic Engineer, by Gergely Orosz