Language is our latent space - by Jon Evans


Annotated by Patrick

And latent space is Plato's Cave

6 hr ago

Jungle Snooker

For the first time in a very long time, the tech industry has discovered an entirely new and unknown land mass, terra incognita we call “AI.” It may be a curious but ultimately fairly barren island ... or a continent larger than our own. We don't know. But signs currently point to enormity, a treasure trove of undiscovered territory and denizens. That's why everyone is so excited/terrified.

That said, there’s still a huge and mysterious gap between discovering strange behavior in this unexplored new land ... and discovering, well, life.

Consider language models, aka LLMs, such as ChatGPT and GPT-4 and LLaMA. We feed them words, and they embed those words, mapping them into N-dimensional space so that similar words/concepts are closer together. (I've written about that process in more technical detail here, simplifying N to 3 to visualize it.) That map of embedded language is called “latent space.”
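To make "similar words/concepts are closer together" concrete, here is a minimal sketch. The three-dimensional vectors are invented by hand purely for illustration (real models learn embeddings with hundreds or thousands of dimensions), and the names `TOY_EMBEDDINGS`, `cosine_similarity`, and `nearest` are hypothetical helpers, not part of any library.

```python
import math

# Hypothetical, hand-picked 3-dimensional embeddings (an assumption for
# illustration; real embeddings are learned, not written by hand).
TOY_EMBEDDINGS = {
    "cat": (0.9, 0.8, 0.1),
    "dog": (0.8, 0.9, 0.2),
    "car": (0.1, 0.2, 0.9),
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means 'same direction'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(word, embeddings=TOY_EMBEDDINGS):
    """Return the other word whose vector is most similar to `word`'s vector."""
    target = embeddings[word]
    others = [w for w in embeddings if w != word]
    return max(others, key=lambda w: cosine_similarity(target, embeddings[w]))


if __name__ == "__main__":
    print(nearest("cat"))
```

In this toy map, "cat" sits much closer to "dog" than to "car", which is all that "closer together" means here: a geometric fact about numbers, chosen so that it lines up with a fact about meaning.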

One of the many semi-miraculous things about LLMs is that such a language map will spontaneously emerge if/when a neural network is “trained to predict the context in which a given word appears.” (I've written about how networks are trained here.) Furthermore, transformers — the architecture with which ~all LLMs are built — don't merely learn how to map words; their “attention heads” also learn higher-order language structure, such as grammar and the typical structure/flow of phrases, sentences, paragraphs, even documents.

Put another way, LLMs construct a map of our language, then learn the structures of recurring patterns in that space. To stretch an analogy, but not so much that it’s wrong, you might think of words embedded in latent space as places on a map, and the discovered grammatical and conceptual patterns as the roads and rivers and pathways which can connect those places. LLMs are not trained to be chatbots. What they are trained to do (because it’s the only thing we know how to train them to do!) is take an existing set of words, the "prompt," and continue it. In other words, given the start of a journey on that language map, they guess how and where that journey is most likely to continue. And they have gotten shockingly good at this.
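The "take a prompt and continue it" idea can be sketched with something far cruder than a transformer. The example below is a toy bigram counter, swapped in as a stand-in: it only looks at the single previous word and always picks the most frequent follower, whereas a real LLM conditions on the whole prompt and samples from a probability distribution. The corpus and the function names `train_bigrams` and `continue_prompt` are assumptions made up for this illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(tokens):
    """Count, for every word, which words were seen immediately after it."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def continue_prompt(counts, prompt, steps):
    """Greedily extend `prompt` by up to `steps` words, one most-likely word at a time."""
    output = list(prompt)
    for _ in range(steps):
        followers = counts.get(output[-1])
        if not followers:
            break  # the last word was never followed by anything in training
        output.append(followers.most_common(1)[0][0])
    return output


# Hypothetical training text, chosen only to keep the example tiny.
corpus = "the cat sat on the mat and the cat sat on the sofa".split()
model = train_bigrams(corpus)

if __name__ == "__main__":
    print(" ".join(continue_prompt(model, ["the"], 3)))
```

Even this crude counter "knows" nothing about cats or sofas; it has only tallied which journeys on the map were taken most often.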

But it's important to understand this is all “just” math. The only inputs an LLM actually receives are numbers, which it transforms into other numbers. It's humans who convert those numbers to/from language, and then, of course, meaning.

Another analogy, as two combined can be more illuminating than one: consider snooker, the pool-like game won by sinking balls of varying value in the best possible order. Imagine a snooker table the size of Central Park, occupied by thousands of pockets and millions of numbered balls (the numbers 1 through 32000, repeated.) Now imagine that the rules of snooker — i.e. which balls are most profitable to sink — change after every shot, depending on where the cue ball is, which balls have previously been sunk, the phase of the moon, etc.

Call that “Jungle Snooker”, borrowing from Eric Jang's idea of Jungle Basketball. The numbers on the balls represent word embeddings; ‘which balls to aim to sink in which order,’ the patterns in latent space. All we have really taught modern LLMs is how to be extremely (stochastically) good at Jungle Snooker, which doesn’t feel that different, qualitatively, from teaching them how to be extremely good at Go or chess. Now, the results, when converted into words, are phenomenal, often eerie —

— but LLMs still don’t “know” that their numbers represent words. In fact they never see words per se; we actually break language into tokens, word fragments basically like phonemes, number those tokens, and feed those numbers in as inputs. (Tokenization helps deal with compound words, borrowed words, portmanteaus, etc., and generally just leads to better results.) Jungle Snooker is literally the only thing LLMs do. They don’t “understand” what words are, much less the concepts that words refer to.
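As a rough sketch of "break language into tokens, number those tokens, and feed those numbers in," the example below splits a word into fragments and returns only their numeric IDs. The vocabulary `TOY_VOCAB` is invented for illustration, and the greedy longest-match rule is a simplifying assumption: production tokenizers typically learn their fragments and merge rules from data rather than using a hand-written table.

```python
# Hypothetical fragment-to-ID table (an assumption; real vocabularies are
# learned from data and contain tens of thousands of entries).
TOY_VOCAB = {"un": 0, "break": 1, "able": 2, "s": 3, "snook": 4, "er": 5}


def tokenize(word, vocab=TOY_VOCAB):
    """Split `word` into known fragments, longest match first, and return their IDs."""
    ids = []
    position = 0
    while position < len(word):
        # Try the longest remaining slice first, then progressively shorter ones.
        for end in range(len(word), position, -1):
            piece = word[position:end]
            if piece in vocab:
                ids.append(vocab[piece])
                position = end
                break
        else:
            raise ValueError(f"no token covers {word[position:]!r}")
    return ids


if __name__ == "__main__":
    print(tokenize("unbreakable"))
    print(tokenize("snookers"))
```

The model downstream of this step would receive only the lists of integers; the strings they came from never reach it.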

...So why and how are they so unreasonably effective?

Present-day AI hype isn't, really. Its practitioners seem genuinely astonished that we seem to have unexpectedly vaulted into a new era of spookily good, sometimes astonishing, capabilities. This seems especially true whenever language is involved, even across multiple modes of media (images with captions, videos with transcripts, etc.) Why and how might this be happening?

Sigh looks more and more like all intelligent tasks have a similar underlying structure which means that they are potentially all expressive with condensed language which means

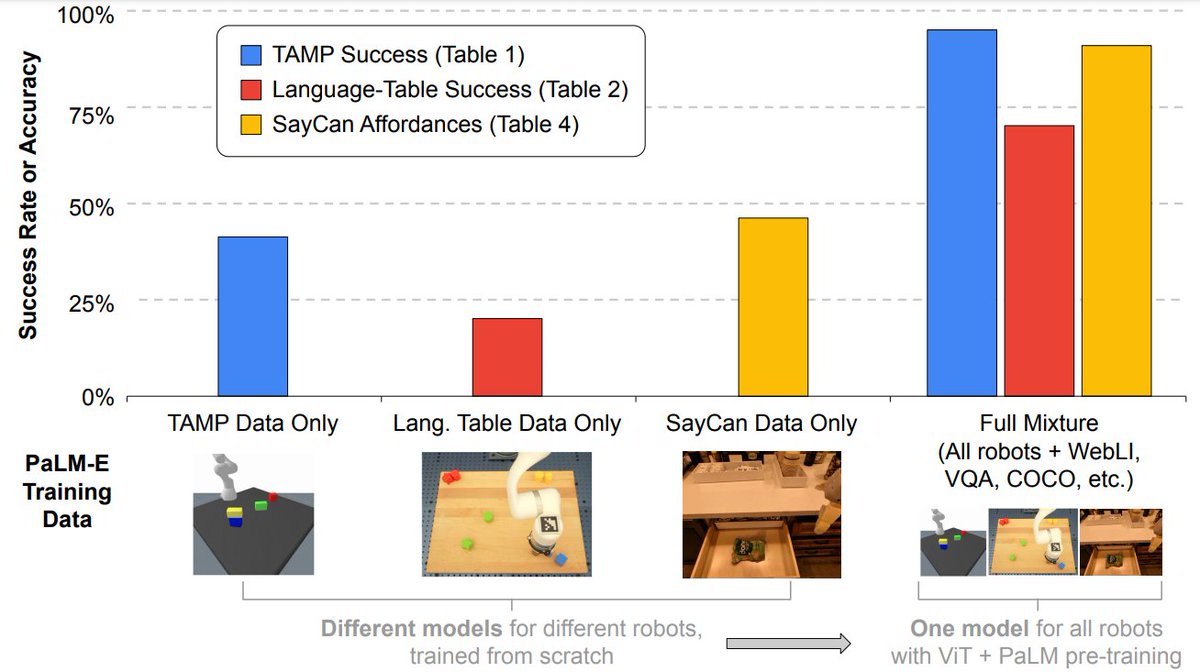

Perhaps most exciting about PaLM-E is **positive transfer**: simultaneously training PaLM-E across several domains, including internet-scale general vision-language tasks, leads to significantly higher performance compared to single-task robot models.

EpisteLLMology

OK, this is where I madly handwave. (As if I wasn't already.) One of the most interesting things about LLMs is what they may imply about how we think. In particular, what LLMs do with numbers is very similar to what we do with language. We hardly notice, because language is water we live in, but words are how we embed meaning, indicate that concepts are juxtaposed or similar or reminiscent, and form our own mental maps of reality. Words are our embeddings, and language is our latent space.

That’s perhaps why smart people are so often fascinated by linguistics, or even the so-called lowest form of humor, the pun; a pun is funny because it's a flawed / dissonant embedding. The unreasonable effectiveness of LLMs, says me, stems from the fact that we have already implicitly encoded a great deal of the world’s complexity, and our understanding of it, into our language -- and LLMs are piggybacking on the dense Kolmogorov complexity of that implicit knowledge.

This is why Samuel Johnson's dictionary was so monumental; it was the first formalization of the English embedding protocol. As any software engineer knows, you need at least semi-consistent protocols for people to build on each other's work ... or, at least, it makes things a whole lot easier. Every dictionary is at least to some extent also an encyclopedia. Each is also entirely self-referential, a set of words all used to define one another. It's the connections between words where meaning lives. Put another way, a dictionary may look like a book that runs in sequential order, but in fact a dictionary is a network ... or, if you will, a set of patterns in latent space.
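The "dictionary is a network" point can be sketched in a few lines. The three-entry `TOY_DICTIONARY` below is invented for illustration, and `definition_graph` is a hypothetical helper; the sketch simply links each headword to the other headwords that appear in its definition.

```python
# Hypothetical three-entry dictionary (an assumption for illustration).
TOY_DICTIONARY = {
    "big": "large in size",
    "large": "big in size",
    "size": "how big or large a thing is",
}


def definition_graph(dictionary):
    """Map each headword to the other headwords used in its definition."""
    graph = {}
    for headword, definition in dictionary.items():
        words = set(definition.split())
        graph[headword] = sorted(
            w for w in words if w in dictionary and w != headword
        )
    return graph


if __name__ == "__main__":
    for headword, neighbors in definition_graph(TOY_DICTIONARY).items():
        print(headword, "->", neighbors)
```

Every entry here points only at other entries, so the alphabetical order of the book is incidental; the structure that carries the meaning is the graph.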

Not that language is consistent. Nor should it be! (I'm a descriptivist.) But semi-formalized language is consistent enough. It's still super fuzzy, but I would argue that fuzziness is a feature, not a bug -- and, similarly, smart people describe LLMs as "fuzzy processors."

Our latent space, known as language, implicitly encodes an enormous amount of knowledge about the world: concepts, relationships, interactions, constraints. LLM embeddings in turn implicitly include a distilled version of that knowledge. A reason LLMs are so unreasonably effective is that language itself is a machine for understanding, one which, it turns out, includes undocumented and previously unused capabilities — a “capability overhang.”

I concede the above claim is a rare and less-than-optimal combination of a) kind of obvious b) violently handwavey & speculative & non-rigorous. And offhand I can't think of any testable predictions which fall out of it. But it's been nagging at the back of my brain for weeks, so I needed to exorcise it to my Substack. Not least because this notion in turn strongly echoes the most famous thought experiment of all time —

The ALLMegory Of The Cave

You might read the above and think: “aha, so if that’s true, super huge if by the way, that means LLMs are dumb and overhyped, since they get all their propulsive power from us, in the form of language's implicit machinery -- they're just repurposing a capability that already exists!” But I believe this is 100%, 180°, the wrong take.

Some 2500 years ago Plato proposed the Allegory of the Cave, in which he describes

a group of people who have lived chained to the wall of a cave all their lives, facing a blank wall. The people watch shadows projected on the wall from objects passing in front of a fire behind them and give names to these shadows. The shadows are the prisoners' reality, but are not accurate representations of the real world.

That ... seems like a pretty good analogy for an LLM? The numbered tokens fed to it are the shadows it sees. The puppets casting those shadows are language. Training consists of projecting shadows onto the wall until the prisoners learn their patterns -- but their “understanding” remains purely mathematical. All they know is shadows on a wall; they have no idea that such things as puppets and caves exist, much less that they're in a cave and a whole world exists beyond.

But to an extent this is how we started too. In Plato’s thought experiment, the shadows

represent the fragment of reality that we can normally perceive through our senses, while the objects under the sun represent the true forms of objects that we can only perceive through reason. Three higher levels exist: the natural sciences; mathematics, geometry, and deductive logic; and the theory of forms.

But before Plato’s time, in a time before language, our human or proto-human ancestors lived in a much starker and bleaker cave yet, unable to share or discuss the “shadows” of their sensory experiences in order to understand them. We could see all the miraculous colors of a rainbow, no hard Sapir-Whorf here -- but without language, we would have had enormous difficulty conveying the concept to a clanmate who hadn't witnessed one. Language is how we broke those shackles, and escaped that cave.

Invert Plato's cave, and imagine yourself as a puppet master, trying to reach out to chained prisoners to whom you can only communicate with shadows. Similarly, right now all we have are machines that we can teach to play Jungle Snooker.

But if we do ever build a machine capable of genuine understanding -- setting aside the question of whether we want to, and noting that people in the field generally think it's “when” not “if” -- it seems likely that language will be our most effective shacklebreaker, just as it was for us. This in turn means today's LLMs are likely to be the crucially important first step down that path.

The question is whether there is any iterative path from Jungle Snooker to Plato's Cave to emergence. Some people think we'll just scale there, and as machines get better at Jungle Snooker, they will naturally develop a facility for abstracting complexity into heuristics, which will breed agency and curiosity and lead to a kind of awareness — or at least behavior which seems like awareness — in the same way that embeddings and latent space spontaneously emerge when you teach LLMs.

Others (including me) suspect that whole new fundamental architectures and/or training techniques will be required. But either way, it seems very likely that language will be key — and that modern LLMs, though they'll look almost comically crude in even five years, are a historically important technology. Language is our latent space, and that's what gives it its unreasonable power.
